If you’ll remember from a set of posts last year, I had floated the idea of mining malware for evidence of automation system compromise. The basic premise was to look for evidence of interactions with control systems by analyzing malware samples graciously sent to me by @VXShare.

The project that I eventually embarked on was… ambitious. It involved parsing out the string data from (initially) the 2 TB of @VXShare malware samples I had immediate access to, and shoving it into a database for searching. The intent was to get an infrastructure set up that would allow ready searches of the entire dataset. From this point, searches could be generated for various ‘indicators’ of an automation system, such as specific IP addresses and ports, usernames/passwords, hostnames, DLL files, extensions, etc.
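For illustration, a minimal sketch of that kind of pipeline might look like the following. It is not the original script: the `extract_strings` and `find_indicators` helpers and every pattern in `INDICATORS` are hypothetical, chosen only to show the idea of pulling printable strings out of a sample and matching them against automation-related indicators.

```python
import re
from typing import Dict, List

# Runs of 4 or more printable ASCII bytes, similar in spirit to the Unix `strings` tool.
PRINTABLE_RUN = re.compile(rb"[\x20-\x7e]{4,}")

# Hypothetical indicators for illustration only; a real list would come from
# studying actual automation software.
INDICATORS = {
    # An IPv4-looking address followed by port 502 (the standard Modbus/TCP port).
    "modbus_tcp_endpoint": re.compile(r"\b\d{1,3}(?:\.\d{1,3}){3}:502\b"),
    # Made-up DLL name standing in for a vendor library.
    "vendor_dll": re.compile(r"example_plc\.dll", re.IGNORECASE),
}


def extract_strings(data: bytes) -> List[str]:
    """Return every printable ASCII run of at least 4 characters found in data."""
    return [m.group().decode("ascii") for m in PRINTABLE_RUN.finditer(data)]


def find_indicators(strings: List[str]) -> Dict[str, List[str]]:
    """Map each indicator name to the extracted strings that matched it."""
    hits: Dict[str, List[str]] = {}
    for s in strings:
        for name, pattern in INDICATORS.items():
            if pattern.search(s):
                hits.setdefault(name, []).append(s)
    return hits
```

In use, a sample's bytes would be read from disk, passed through `extract_strings`, and the result handed to `find_indicators`; an empty dictionary means none of the indicators appeared.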

If you’ve been following along, you’ve already noticed the flaw in my plan that I missed at the beginning: building an infrastructure is neat, but it is not the real intent of the research. The real intent is generating the data for searches so that malware targeting automation can be identified from static code analysis. Unfortunately, I went very far down the infrastructure route before finally turning my attention back to generating appropriate searches based on automation programs. Then life and other work intervened, and I eventually shelved the project.

While planning this massive undertaking, I decided the computer systems I had at my disposal would not be enough. With this in mind, I headed to eBay and picked up a used Dell 2950 on my own dime (I’ve always wanted my own powerful server), with 8 cores and 16 GB of RAM. In my mind, this would be enough processing power to do just about anything I needed. One Ubuntu install la… Well, TWO Ubuntu installs later, I was ready to get crackin’. I got MySQL installed (all the Big Data folks are furiously shaking their heads now), and linked it up to the Python scripts I had put together for pulling information out of the malware samples.

I had built a very basic database design for the incoming information, and hadn’t taken into account the sheer amount of data I would end up processing. I was looking for basically any string in the malware sample, which is great if you assume an executable. But a large chunk of the malware samples are actually PDFs, which means that nearly every byte in the PDF is part of a string, and some strings can go on for 200 or 300 bytes, or even longer, even when they are nonsense. My basic database design didn’t account for this eventuality, so the database either swiftly ballooned in size or the import crashed when length limits were exceeded. Because I originally considered the operation to be straightforward, I hadn’t built the scripts to politely handle these issues. I would kick off a job before I went to bed, and it would eventually hit a PDF and die, causing me to lose a chunk of work. I tried compensating by increasing the max length of the database field, but eventually found it was a losing proposition; there would always be a PDF with a string just slightly longer that would crash the process. I eventually removed PDFs altogether, which also removed the ability to search email phishes for use of automation terms.
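In hindsight, one way to handle this more politely would be to cap every string at the column width before it reaches the database and to keep going when a single sample fails. The sketch below is an assumption-laden illustration, not what the original scripts did: `MAX_LEN`, `prepare_row`, `process_samples`, and the `store` callback are all hypothetical names, and the SHA-256 digest is simply one way to keep a fingerprint of the untruncated string.

```python
import hashlib

MAX_LEN = 255  # assumed width of the text column; adjust to the real schema


def prepare_row(s, max_len=MAX_LEN):
    """Return (stored_text, sha256_of_full_string, was_truncated).

    The text is cut to max_len so it can never exceed the column width,
    while the digest still identifies the complete original string.
    """
    digest = hashlib.sha256(s.encode("utf-8")).hexdigest()
    return s[:max_len], digest, len(s) > max_len


def process_samples(samples, store):
    """Store rows for each (sample_id, strings) pair, surviving per-sample errors.

    `store` is a hypothetical callable taking (sample_id, rows). Any exception
    it raises is recorded and the loop moves on, so one bad file cannot kill
    an overnight job.
    """
    failed = []
    for sample_id, strings in samples:
        try:
            store(sample_id, [prepare_row(s) for s in strings])
        except Exception as exc:  # deliberately broad: log it and keep going
            failed.append((sample_id, repr(exc)))
    return failed
```

The list returned by `process_samples` can be written to a log and retried later, which avoids losing a whole night of work to a single oversized PDF string.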

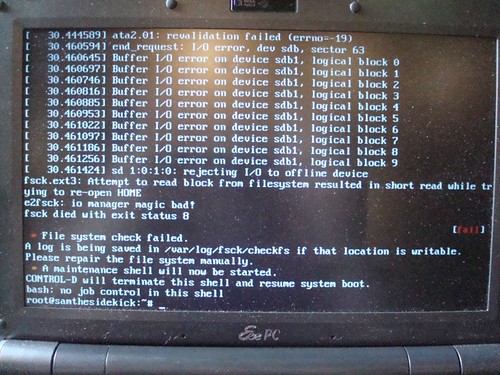

Additionally, even though many jobs would finish successfully, another problem I was encountering was the size of the generated database. I originally thought that strings data from malware samples would be relatively small compared to what I was analyzing, on the order of 2-5% (or around 100 gigabytes max). Well, this was WAY off, as I was getting around 100 gigabytes of processed data after 3 of the 50+ samples. I ended up pulling hard drives from my file server and moving the database onto them. Have you ever moved a MySQL database to a different physical drive? No, of course not, you’re not a database admin, you’re a cyber security professional, why would this be something you’d ever need to do?

After this, the database would chug away on the data with only a little intervention. I’d log into the box remotely and check the status of a job from time to time, but the imports generally just slowly entered the database. Very slowly. So slowly, in fact, that it would take 2 months to completely load the system with all the samples. This is when I encountered another fun problem: searching all these entries was going to take forever due to my lack of normalization in the database.
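To make the normalization point concrete, here is a rough sketch of what a normalized layout could look like: each distinct string is stored once, and a separate link table records which samples contain it. It uses Python’s built-in `sqlite3` purely as a stand-in for MySQL, and the table names, column names, and helper functions are all hypothetical rather than the schema I actually had.

```python
import sqlite3


def create_schema(conn):
    """Create a two-table layout: unique strings plus a sample-to-string link table."""
    conn.executescript(
        """
        CREATE TABLE IF NOT EXISTS strings (
            id    INTEGER PRIMARY KEY,
            value TEXT NOT NULL UNIQUE      -- each distinct string stored once
        );
        CREATE TABLE IF NOT EXISTS sample_strings (
            sample_hash TEXT NOT NULL,
            string_id   INTEGER NOT NULL REFERENCES strings(id),
            PRIMARY KEY (sample_hash, string_id)
        );
        CREATE INDEX IF NOT EXISTS idx_sample_strings_string
            ON sample_strings(string_id);
        """
    )


def add_string(conn, sample_hash, value):
    """Record that a sample contains a string, reusing the string row if it exists."""
    conn.execute("INSERT OR IGNORE INTO strings(value) VALUES (?)", (value,))
    (string_id,) = conn.execute(
        "SELECT id FROM strings WHERE value = ?", (value,)
    ).fetchone()
    conn.execute(
        "INSERT OR IGNORE INTO sample_strings(sample_hash, string_id) VALUES (?, ?)",
        (sample_hash, string_id),
    )


def samples_containing(conn, value):
    """Return the hashes of all samples that contain the exact string, sorted."""
    rows = conn.execute(
        """
        SELECT ss.sample_hash
        FROM sample_strings ss
        JOIN strings s ON s.id = ss.string_id
        WHERE s.value = ?
        ORDER BY ss.sample_hash
        """,
        (value,),
    )
    return [row[0] for row in rows]
```

Because common strings repeat across thousands of samples, storing each one once should shrink the data considerably, and an exact-match search becomes an indexed lookup instead of a scan over every row.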

Eventually, my secondhand 2950 failed me: a power supply died and helped fry a CPU, bringing the major infrastructure activities to a screeching halt. I eventually went back to studying the main problem, pulling appropriate information to help identify malware that had an automation bent.

The major advice I have for researchers and engineers conducting similar research is simple:

1. Make sure you stay true to your original intent. Don’t go off on tangents.

2. If your project looks like it requires skillsets that are out of your usual work, take a step back and reanalyze what you are attempting to do. There may be a simpler way.

3. Avoid building infrastructure, unless the point of the research is to build infrastructure.

Not everything about the malware mining work was unsuccessful, but I wanted to get the lessons learned out of the way first.

title image by emmajanehw