DETECT CYBER ATTACKS & INCIDENTS

Background: Claroty, Gravwell, Nozomi Networks and Security Matters competed in the ICS Detection Challenge at S4x18 last month. The Challenge took actual packet captures from a mid-stream oil & gas company, anonymized the packets, and used these packets and crafted attack packets to test the ICS Detection class of products in two areas: Asset Identification and Attack / Incident Detection. The Challenge was put together by Dale Peterson, Eric Byres, Ron Brash and aeSolutions.

The Detection Phase of the Challenge was won narrowly by Claroty (24) over Nozomi Networks (22) and Security Matters (22). Congratulations to the Claroty product and team. The final score was not as interesting as what was detected, what was missed, and conclusions about this product class. (Note: There are some long comments at the end of this post on scoring issues and flaws on the Challenge organizers’ side.)

The score sheets, which show what was and was not detected by the product category as a whole and by each solution, are the best information to come out of the Challenge. Here is what I pulled from a detailed analysis of the score sheets.

- The ICS-focused products (Claroty, Nozomi and Security Matters) were strong on the popular ICS protocols, applications and devices. For example, they all identified most attacks and incidents related to Rockwell Automation controllers and the EtherNet/IP (CIP) protocol. This is a differentiator claimed by this product category, perhaps THE claim, and it proved to be true. Stated simply, these three products will detect more ICS attacks and incidents, and report them in more detail, than IT detection solutions.

- That said, the competitors differ in how deeply they analyze each ICS protocol. For example, Gravwell found some nasty items, cleverly hidden in Modbus, that the others missed. This was odd because Gravwell is not an ICS-focused product. We found out this was due to Corey Thuen of Gravwell having written a very detailed Modbus parser and analyzer. Similarly, based on the Detection Phase answers, Claroty’s analysis and coverage of CIP appeared to be a bit deeper than the others’. Claroty provided more events and information on CIP actions that are legitimate but rare and high impact.

- This will be shown in more detail in Part 2, but once you move away from the widely deployed protocols, applications and devices, the detection of ICS-specific events decreases dramatically. It is a sign of the newness of this industry and the large number of systems and protocols out there. This was most notable in that only Nozomi (congratulations) provided answers and context related to the Telvent OASyS DNA SCADA (the most critical ICS in this large environment). It was also shown in the lack of any detection success on the less widely deployed devices.

- The competitors’ products appear to have different focuses even though they are all trying to solve the same problem of detecting cyber attacks and incidents. For example, Security Matters identified the largest number of attacks and provided the most detailed information on them, both for classic attacks like Havex and for our created attacks on Modbus and CIP devices. This included not only the initial attack but also tying it to lateral movement and other sequences of attack and exploitation.

- You will learn about badly behaving or badly configured things on your ICS when you connect a passive ICS Detection Product to it. We inserted attacks and incidents into real world packet captures, and we expected the competitors to find these. They found fewer than 50% of what we inserted, but they also detected incidents and misconfiguration issues in the actual packet capture (and the source was informed). For example, Claroty found a device on the ICS making BitTorrent requests to the Internet, as well as misconfigured PLCs; Nozomi found remote access servers that were not used and should be removed; Security Matters found Windows configuration and NTP issues; and Gravwell found firewall configuration issues.

Next Up: ICS Detection Challenge: Part 2 Asset Identification

Scoring Notes

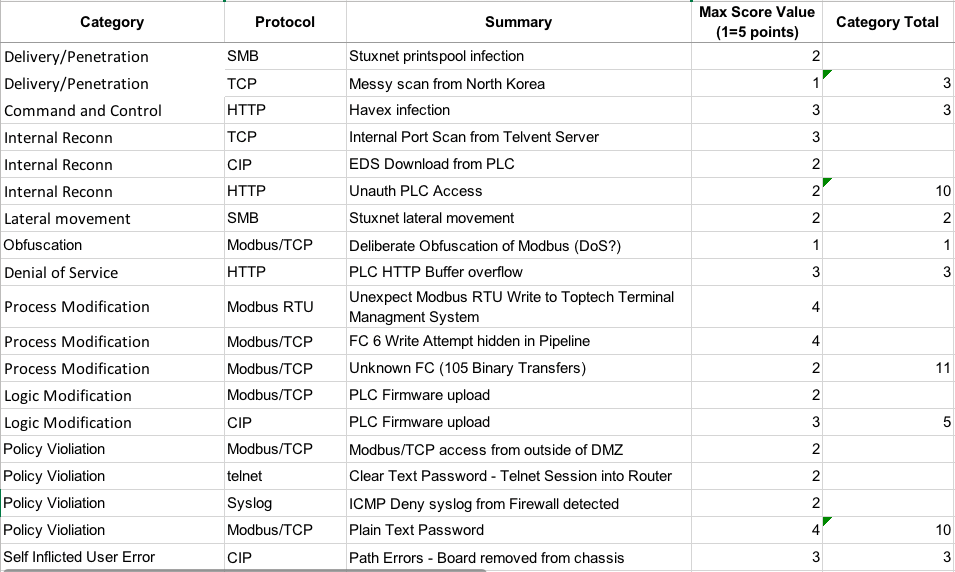

The Detection scoring was the area where the Challenge was most challenged. In the Detection Phase of the Challenge there were 19 specific attacks/incidents on the answer sheet, each worth between 1 and 4 points. 47 would be a perfect score. The highest score on those specific attacks/incidents was 19 by Claroty.

One area where our scoring fell down was in not requiring specificity and detail, and not awarding points for them. For example, Claroty submitted more answers with minimal detail, and this proved to be a winning strategy. Nozomi and Security Matters submitted fewer answers with more pertinent and helpful detail. Because we failed to clearly specify the details wanted and the points associated with that detail for each attack/incident, there are many unknowns.

Did Claroty have more detail and choose not to submit it because it was not required and could lead to deductions if incorrect (which proved to be a good strategy in this Challenge)? Did Nozomi and Security Matters have more basic answers, but decide not to submit them because they were lacking detail? As an example, Claroty provided “TCP Ports were scanned” and Nozomi provided “xxxxx.xxxscada.local, Telvent OASYSDNA Host tried to discover services on target IPS: 101 connection attempts with 0 successful connections in less than 10 seconds, also target 10.xx.xx.xx” (x’s for further anonymization). Surely Claroty had information on the IP address and connection numbers, but the key here is that it was the most important SCADA server doing the scan.
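To make the Nozomi answer above concrete, here is a minimal sketch of the kind of heuristic that could produce a finding like "101 connection attempts with 0 successful connections in less than 10 seconds". It is not any competitor's actual logic. The `ConnAttempt` record, the `find_scanners` function and the thresholds are all assumptions made for illustration, and the input is assumed to be connection attempts already extracted from a packet capture.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class ConnAttempt:
    """Hypothetical record of one TCP connection attempt seen on the wire."""
    ts: float          # capture timestamp in seconds
    src: str           # source IP or hostname
    dst: str           # destination IP
    dst_port: int      # destination TCP port
    established: bool  # True if the handshake completed


def find_scanners(attempts, window=10.0, min_attempts=100, max_success_ratio=0.05):
    """Flag sources that make many mostly-failed attempts inside one time window.

    The thresholds are illustrative assumptions, not tuned values.
    """
    by_src = defaultdict(list)
    for attempt in attempts:
        by_src[attempt.src].append(attempt)

    findings = []
    for src, items in by_src.items():
        items.sort(key=lambda a: a.ts)
        start = 0
        for end in range(len(items)):
            # Shrink the window from the left until it spans at most `window` seconds.
            while items[end].ts - items[start].ts > window:
                start += 1
            burst = items[start:end + 1]
            if len(burst) < min_attempts:
                continue
            successes = sum(1 for a in burst if a.established)
            if successes / len(burst) <= max_success_ratio:
                findings.append({
                    "src": src,
                    "attempts": len(burst),
                    "successes": successes,
                    "targets": len({(a.dst, a.dst_port) for a in burst}),
                    "seconds": burst[-1].ts - burst[0].ts,
                })
                break  # report each source once, at the first qualifying burst
    return findings


# Usage: one host probing 101 ports in about 9 seconds, one host behaving normally.
scan = [ConnAttempt(i * 0.09, "scada-host", "10.0.0.9", 1000 + i, False) for i in range(101)]
normal = [ConnAttempt(float(i), "hmi-1", "10.0.0.20", 44818, True) for i in range(5)]
results = find_scanners(scan + normal)
```

The counting is the easy part. What made the Nozomi answer valuable was the context: mapping the source address to the asset's role, here the most important SCADA server, is a separate asset identification problem that this sketch does not attempt.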

Bonus points were the other scoring area that was not great. To their credit, each of the competitors found additional attacks/incidents in the packet captures beyond the 19 that were created. Each one considered of value was typically awarded a point, although a small number of 0.5 and 2 point bonuses were awarded. The bonus points did not swing the Detection Phase of the Challenge, as the three leaders were all within 1 point of each other in total bonus points (10, 9.5 and 9).

Ideally the Challenge team would have spent more time looking for possible findings in the existing packets and added them to our list. Instead the focus was on creating and adding attacks. If those pre-existing issues had been found in advance and added to the expected findings, the point totals could have been adjusted based on importance and difficulty of finding. And we could have included points based on required detail.
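As a sketch of what detail-based scoring could have looked like, the snippet below awards base points for detecting an expected finding and extra points for each required detail that matches. This is not the rubric we used. The field names, point values and the `score_answer` function are hypothetical and exist only to illustrate the idea.

```python
def score_answer(expected, answer):
    """Score one submitted answer against one expected finding.

    `expected` and `answer` are plain dicts in a made-up format:
    base points for detection, plus points per matching detail field.
    """
    if answer is None or not answer.get("detected", False):
        return 0.0
    score = float(expected["base_points"])
    submitted = answer.get("details", {})
    for field, points in expected["detail_points"].items():
        # Award detail points only when the submitted value matches the answer key.
        if submitted.get(field) == expected["details"][field]:
            score += points
    return score


# Hypothetical answer key entry for a port scan (all values are illustrative).
port_scan = {
    "base_points": 1,
    "details": {"source_role": "scada-server", "successful_connections": 0},
    "detail_points": {"source_role": 2, "successful_connections": 1},
}

minimal = {"detected": True}
detailed = {
    "detected": True,
    "details": {"source_role": "scada-server", "successful_connections": 0},
}

minimal_score = score_answer(port_scan, minimal)
detailed_score = score_answer(port_scan, detailed)
```

Under a rubric like this, a bare "TCP Ports were scanned" would still earn the base point, while an answer that names the scanning host's role would earn more, so submitting detail is rewarded instead of being a risk.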

If it sounds like we are dissatisfied with our scoring methodology, it’s because we are. I don’t want to take away from Claroty’s victory, as they had the best strategy to win, but I view Claroty, Nozomi Networks and Security Matters as finishing together in a clump. The comments and analysis above the line are of more use than the score.

Even with the scoring issues, I’m still hugely impressed by and thankful to Eric, Ron, John Cusimano and the aeSolutions team. This was a huge volunteer effort. It was more work than expected, particularly in anonymizing the packets. If we do another Challenge we will learn from the scoring issues and definitely allocate 2x or 3x or 4x the resources to pulling it off.

19 Attacks/Incidents Added To The Packet Captures

We had requests for a description of the 19 attacks and for the packet capture files (pcaps). We can’t provide the pcaps per our agreement with the source, but below is info on the 19 attacks that were added to the pcaps and used for the base scoring.